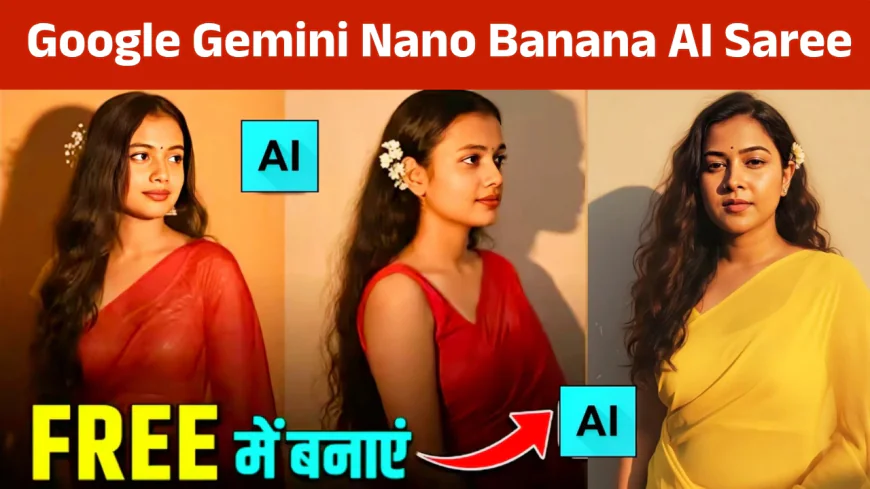

“When AI Sees More Than I Do”: Gemini’s Nano Banana Saree Trend Gives Unexpected Chills

An Indian woman was startled when a Google Gemini edit made for the viral “Nano Banana AI saree” trend added a mole to her image: one that was not visible in her original photo, but that she says she really has. The episode has set privacy alarm bells ringing on social media.

The Trend That Started It All

The Gemini Nano Banana AI edit has quickly become Instagram's latest craze. Users upload ordinary photos and watch them transform into grand, vintage-Bollywood saree portraits, complete with golden light, period-style film grain, and fabric billowing in the wind.

The Mole That Wasn’t Wanted

For Instagram user Jhalakbhawani, however, the transformation went further than expected. She uploaded a photo of herself wearing a green, full-sleeved suit.

The AI-generated image showed her draped in a saree, with a mole on her left hand. She says she really does have a mole in exactly that spot, yet it was not visible in the photo she uploaded. She commented:

“How did Gemini know that I have a mole on this part of my body? … It’s very scary and creepy.”

Public Response: Fear, Curiosity & Doubt

Responses quickly flooded social media. Many users were alarmed and warned others to be careful about what they share with AI tools. Skeptics suggested that she might have edited the image herself, or that the AI had simply made a lucky guess. Others accused her of deliberately staging the scare to gain followers.

What Protections Does Gemini Offer?

Google says it has built in several safeguards:

● An invisible SynthID watermark and metadata tags that identify images as AI-generated.

● These markers are not visible to the eye but can be detected with dedicated software.

The catch, however, is that these detection tools are not publicly available, so ordinary users cannot easily check whether a photo has been altered or is entirely AI-generated. Experts also warn that watermarking alone may not be enough to prevent misuse.

The Bigger Picture: Privacy in the AI Age

The incident raises several questions:

● How much data do AI models really “know”?

● Are they drawing on private photos, inferring patterns, or learning from user uploads in some entirely new way?

● Where does the tech industry's responsibility end and the user's begin when it comes to safeguarding identity and likeness?

What Should Users Do?

● Think carefully before uploading photos, especially ones that clearly show distinctive personal features.

● Read the platform's policies on data usage, image storage, and AI model training before you upload.

● Where tools are available to you, use them to check images for watermarks or metadata.

● Speak up about your concerns. The companies involved will only become more transparent if the public demands it.
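For the metadata part of that advice, a rough first check is possible at home. The minimal Python sketch below assumes, without confirmation from this article, that an AI tool labels its output with the IPTC “Digital Source Type” terms trainedAlgorithmicMedia or compositeWithTrainedAlgorithmicMedia inside the image's embedded XMP metadata; it simply scans the file's raw bytes for those strings. The helper names find_ai_metadata and check_image are hypothetical. It cannot detect SynthID, which is hidden in the pixels themselves, and a negative result proves nothing, since screenshots and many re-uploads strip metadata.

```python
import sys
from pathlib import Path

# IPTC "Digital Source Type" vocabulary terms for AI-generated or AI-edited media.
# Assumption: the tool that produced the image wrote one of these into its metadata.
AI_SOURCE_TERMS = (
    b"trainedAlgorithmicMedia",
    b"compositeWithTrainedAlgorithmicMedia",
)


def find_ai_metadata(data: bytes) -> list[str]:
    """Hypothetical helper: return every AI-source term found in the raw image bytes."""
    # Matching is case-sensitive, so "compositeWithTrained..." does not also
    # count as a hit for the lowercase "trained..." term.
    return [term.decode("ascii") for term in AI_SOURCE_TERMS if term in data]


def check_image(path: str) -> list[str]:
    """Hypothetical helper: read an image file and scan it for AI-source terms."""
    return find_ai_metadata(Path(path).read_bytes())


if __name__ == "__main__":
    for name in sys.argv[1:]:
        hits = check_image(name)
        if hits:
            print(f"{name}: AI-source metadata found: {', '.join(hits)}")
        else:
            # Absence proves nothing: metadata is easily stripped, and SynthID
            # watermarks live in the pixels, not in these tags.
            print(f"{name}: no AI-source metadata found (not proof of authenticity)")
```

Run it with one or more image paths as arguments. Scanning raw bytes is crude but avoids depending on any image library; a dedicated metadata reader such as ExifTool gives a more complete view of what a file actually carries.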

Final Thoughts

As of 15 September 2025, the Nano Banana trend is no longer just a fashion novelty. It signals that AI does not merely reflect its users: in some cases, it may reveal or fabricate features that the original image does not even hint at.