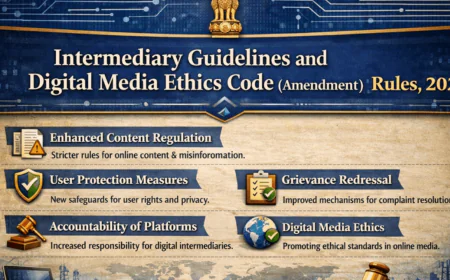

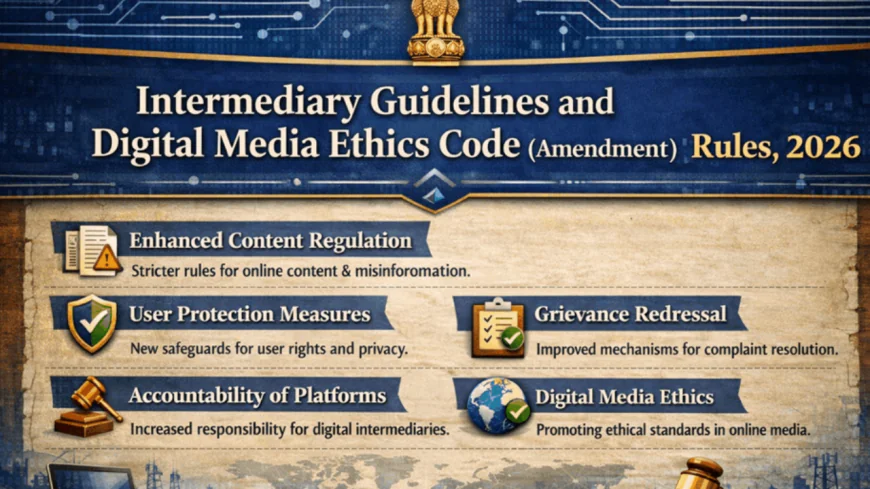

IT Intermediary Rules Amendment 2026 Enforces Strict Social Media Content Removal Deadlines in India

The IT Intermediary Rules Amendment 2026 introduces a 3-hour content removal deadline, 2-hour deepfake takedown rule, mandatory AI content labelling, and stricter safe harbour compliance under Section 79 of the IT Act.

The IT Intermediary Rules Amendment 2026 has been officially notified by the Government of India and will come into force on 20 February 2026. From that date, it applies to key social media intermediaries in India and to any digital platform under the jurisdiction of India's IT regulatory laws, and it sharply reduces the time allowed for removing unlawful and harmful content. The amendment is intended to strengthen digital responsibility, combat deepfakes, and speed up grievance redressal in an increasingly AI-centred online ecosystem. It revises the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules and substantially increases the compliance requirements placed on intermediaries.

Radical Decrease in Content Takedown Timelines

A major highlight of the IT Intermediary Rules Amendment 2026 is a strict, tiered takedown system introduced under the broader framework of the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules Amendment 2026. Under the new provisions, platforms must remove unlawful content within three hours of receiving an order from a court or a government agency empowered to issue one, a drastic reduction from the previous 36-hour window. The amendment therefore signals a shift toward rapid-response governance in digital regulation. Tougher still is the two-hour deadline for removing non-consensual deepfake nudity or intimate images. Policymakers argue that this reform reflects the urgency required to address AI-generated abuse. Critics, however, question whether platforms can verify both the legality of takedown orders and the authenticity of the flagged content within such constrained timeframes. Grievance redressal mechanisms have also been tightened: the resolution period for user complaints has been cut from 15 days to seven days, adding to the compliance pressure embedded in the IT Intermediary Rules Amendment 2026.

Mandatory AI Labelling and User Statements

Beyond takedown speed, the IT Intermediary Rules Amendment 2026 imposes structural obligations aimed at AI transparency. The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules Amendment 2026 now mandates that all synthetically generated information (SGI) be clearly and visibly labelled. Such content must also carry permanent metadata and identifiers so that AI-generated material can be traced. The measure reflects growing regulatory concern about misinformation and synthetic manipulation. The amendment therefore attempts to institutionalise accountability in digital publishing ecosystems.

In addition, large intermediaries must obtain statements from users declaring whether the content they upload is AI-generated. The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules Amendment 2026 also obliges platforms to deploy technical verification tools to cross-check these declarations. Moreover, the once-a-year compliance notice has been replaced by quarterly warnings informing users of the penalties for breaching digital norms. The IT Intermediary Rules Amendment 2026 thus shifts regulatory culture from passive compliance to continuous enforcement of user awareness.

Safe Harbour Risk and Legal Liability Concerns

Perhaps the most consequential aspect of the IT Intermediary Rules Amendment 2026 is the potential loss of safe harbour protection under Section 79 of the Information Technology Act, 2000. This protection shields intermediaries from liability for user-generated content, and those that miss removal deadlines or fail to meet AI labelling requirements risk losing it. The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules Amendment 2026 therefore introduces direct legal exposure for digital platforms.

This has important implications. Without safe harbour, companies could be held liable for unlawful posts by their users, which would change the nature of India's intermediary liability regime. Supporters argue that the IT Intermediary Rules Amendment 2026 strengthens national digital sovereignty and victim protection. Opponents counter that it will encourage over-censorship and have a chilling effect on legitimate expression. The tightening comes amid a global debate on AI governance, platform responsibility, and content moderation standards. The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules Amendment 2026 positions India among the jurisdictions adopting aggressive compliance frameworks in response to technological disruption.

The implementation of the IT Intermediary Rules Amendment 2026 marks a transformative shift in India’s digital governance architecture. By compressing takedown timelines, mandating AI labelling, enforcing quarterly user warnings, and threatening safe harbour withdrawal under Section 79, the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules Amendment 2026 significantly raises the compliance bar for social media intermediaries. Whether this strengthens digital accountability or intensifies concerns about regulatory overreach will depend on how effectively the rules are enforced in practice.