Google Gemini AI Cloning Attack: Commercial Actors Flood AI System With 100,000+ Prompts

Google reveals a major Google Gemini AI cloning attack involving 100,000+ prompts by commercial actors. Explore AI chatbot security risks, industry impact, regulatory response, and future AI protection strategies in 2026.

In February 2026, Google announced that Gemini, its highest-profile AI chatbot, had been targeted by commercially motivated actors trying to recreate its capabilities through mass prompting. The actors were unnamed business organisations seeking a competitive advantage. They reportedly used more than 100,000 structured prompts to harvest responses at scale. The probing reportedly ran over an extended period before Google disclosed it, on the digital platforms where Gemini is publicly available. The Google Gemini AI cloning attack raises urgent concerns about intellectual property theft, data extraction, and escalating AI chatbot security risks in a rapidly commercialising AI industry.

The Tactical Reasoning Behind the Incident

The Google Gemini AI cloning attack was not random experimentation; it reflects a calculated business strategy. By sending Gemini prompts over and over, the actors tried to reverse-engineer its patterns, outputs, and system behaviour. In 2023 these concerns were mainly hypothetical. By 2024, small AI companies had started quietly scraping their competitors' outputs. By 2025, structured prompt harvesting had become more organised. The 2026 case suggests that monetisation pressure has intensified the practice. This pattern exposes growing AI chatbot security risks, particularly when proprietary AI models are accessible via public APIs. Large language models cost companies billions to train, yet repeated querying can slowly approximate their logic.

The positive side: It pushes companies toward stronger protection.

The negative side: It exposes the weaknesses in how commercial AI systems are protected.

The Competitiveness of Mass Prompting

Technically, the Google Gemini AI cloning attack reveals how volume can substitute for direct code access. Attackers do not steal the code; instead, they emulate the model's behaviour by training on a large number of input-output pairs. The attack also signals a shift from traditional hacking toward behavioural replication.
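To make the idea of behavioural replication concrete, here is a deliberately tiny sketch. It is not how the reported attack worked, and a real language model is vastly more complex than a straight line. The `black_box` function, the query count, and the `fit_line` helper are all hypothetical stand-ins chosen for illustration; the point is only that enough input-output pairs can reveal behaviour without any access to the underlying code.

```python
import random


def black_box(x: float) -> float:
    """Hypothetical stand-in for a proprietary system; callers see only outputs."""
    return 3.0 * x + 2.0


def fit_line(pairs):
    """Ordinary least squares fit of y = a*x + b from (x, y) pairs."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


random.seed(0)  # fixed seed so the sketch is reproducible

# "Mass prompting": many queries, each recorded as an input-output pair.
queries = [random.uniform(-10, 10) for _ in range(1000)]
pairs = [(x, black_box(x)) for x in queries]

# The surrogate is built from the recorded pairs alone.
a, b = fit_line(pairs)


def surrogate(x: float) -> float:
    """Approximation of black_box learned purely from observed behaviour."""
    return a * x + b
```

In this toy case the surrogate recovers the hidden behaviour almost exactly because the hidden function is linear. A real model would yield only a rough approximation, which is why the volume of queries matters so much.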

From a critical standpoint, the larger issue is expanding AI chatbot security risks across industries. When chatbots are deployed in healthcare, finance, or government services, mass prompting may reveal logic patterns or biases. In 2022, AI misuse centred on misinformation. Data scraping dominated 2023 and 2024. By 2025 and 2026, competitive cloning had emerged as a more advanced threat.

The positive side is that firms have been investing heavily in rate limiting, output watermarking, and anomaly detection. The negative side is that regulation still lags behind innovation, leaving loopholes in global AI regulation.
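As a rough illustration of the rate limiting mentioned above, the sketch below implements a sliding-window limiter per client. It is a minimal example under stated assumptions, not a description of Google's defences: the class name `PromptVolumeMonitor`, the thresholds, and the idea of keying on a `client_id` are all hypothetical.

```python
from collections import defaultdict, deque


class PromptVolumeMonitor:
    """Sliding-window limiter: at most max_requests per client per window."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # One queue of accepted request timestamps per client.
        self._history = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        """Return True and record the request if the client is under the limit."""
        timestamps = self._history[client_id]
        # Discard timestamps that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False  # over the limit: reject, and do not record
        timestamps.append(now)
        return True


# Usage: allow three prompts per minute for each client.
monitor = PromptVolumeMonitor(max_requests=3, window_seconds=60.0)
decisions = [monitor.allow("client-a", t) for t in (0.0, 1.0, 2.0, 3.0)]
```

Passing `now` explicitly keeps the example deterministic; a deployed service would more likely read a monotonic clock. A limiter like this only slows high-volume harvesting from a single identity, so in practice it would sit alongside the anomaly detection and watermarking noted above.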

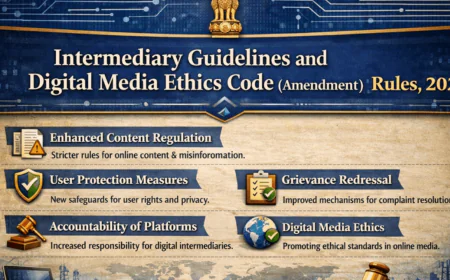

Industry Implications and Regulatory Pressure

The broader impact of the Google Gemini AI cloning attack extends beyond Google. If one large AI system can be attacked this way, the others are susceptible too. This raises systemic AI chatbot security risks that regulators cannot ignore. AI governance gained momentum in the European Union in 2024. In the US, voluntary AI safety commitments were broadened in 2025. By 2026, enforcement discussions are becoming sharper and more economically focused. If the Google Gemini AI cloning attack becomes normalised, AI firms may restrict open-access models, limiting innovation ecosystems. Therein lies the tension: increased protection can inhibit cooperation. At the same time, ignoring these AI chatbot security risks could erode trust among enterprise users and investors. The reasonable answer is layered defence: technical protection, contractual limitations, and regulatory clarity. Where commercial gain is the motive, businesses need to move from reactive to proactive monitoring.

Ultimately, the Google Gemini AI cloning attack represents a turning point in how AI competition is unfolding globally. It underscores deepening AI chatbot security risks that demand coordinated technological and legal responses. As the pace of innovation accelerates, protection systems must evolve just as quickly. The future of AI will be defined not only by intelligence levels, but also by how effectively companies manage AI chatbot security risks in an increasingly competitive digital economy.